I wanted to make a quick point about the current trend in console gaming. This generation of consoles (i.e. the PS3 and Xbox 360) has touted ‘High-Definition’ as its key selling point and its main advance over the previous generation. Both of these consoles are capable of outputting at 1080p resolutions.

As this generation seems to be nearing the end of its lifespan, there are a couple of things that I’ve noticed.

Resolution

It seems that the vast majority of games are rendered on consoles in either 720p or ‘sub-HD’ resolutions (which are lower than 720p). I suspect this is due to a combination of at least two factors. Firstly, despite the strong hardware specifications of the consoles at release, games technology has progressed to the point where console hardware simply does not have the raw horsepower required to render graphics at high resolutions without a serious performance hit. This problem cannot really be solved, because consoles have to stick with the same innards for their entire lives.
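To put rough numbers on the resolution point, here is a quick back-of-the-envelope sketch in Python. It only counts pixels per frame, which is a crude proxy for rendering cost (the real cost depends on far more than pixel count), and the 1024x600 entry is just an illustrative ‘sub-HD’ resolution that I have picked, not a figure for any particular game.

```python
# Pixel counts per frame for a few output resolutions.
# The "sub-HD" entry is an illustrative assumption, not taken from a specific game.
RESOLUTIONS = {
    "sub-HD (1024x600, illustrative)": (1024, 600),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def pixel_count(width, height):
    """Number of pixels that must be drawn for one frame."""
    return width * height


def relative_cost(name, baseline="720p"):
    """Pixels per frame relative to the baseline resolution."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


for name, (width, height) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(width, height):,} pixels, "
          f"{relative_cost(name):.2f}x the pixels of 720p")
```

By this measure, 1080p means pushing 2.25 times as many pixels per frame as 720p, which goes some way towards explaining why so few games attempt it.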

The other reason may simply be laziness on the part of developers. We have seen games like Gran Turismo 5 achieving incredible amounts of performance on the PS3. However, this game took a huge amount of time and a lot of money to develop – something which a lot of developers do not have. The latter is especially telling when companies are churning out incremental updates to franchises every year (think FIFA and Call of Duty). Unfortunately, because this is where the big money is, developers are much more willing to maintain the status quo and not really expend much effort to push any boundaries, simply because there is no financial incentive to do so.

The thing that I’ve realised the most whilst playing many of these games is that graphical sophistication isn’t much of an issue to me. Judging by the success of the Wii, it probably doesn’t concern more casual gamers either. I would rather take a game with lower graphical standards but with a higher resolution. In my opinion, acuity (the sharpness of the image) is more important from a functional perspective than perceived realism. Great games are great games at the core. This is why a lot of PS2 games that have seen HD face-lifts have been popular. The graphical complexity may be below today’s standards, but make a hit PS2 or PSX game sharp enough to run in 1080p and I am sure that the game would still be a hit today.

Frame Rate

Coming to the main point then, although I think resolution is more important than graphical quality, I think frame rate is more important than both.

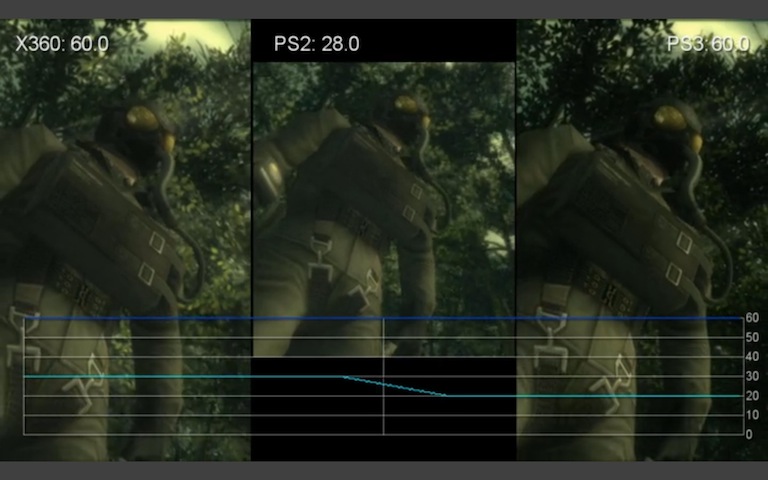

Have a look at the first video at about 1:11, and the second at 4:41.

Ignoring the fact that the picture in the second is clearer because the game has been upscaled to 720p, notice the added smoothness when the camera pans in first-person view (admittedly it’s a bit tricky to see in a YouTube video that is probably encoded at 30fps anyway!). This is because the original MGS3 ran at 30fps on the PS2, whereas the HD version runs at 60fps on the PS3. Having played the original to death, playing the HD version proved to be a great experiment. The added acuity was nice, but by far the biggest improvement in the game was down to the improved (and consistent) frame rate. There’s almost no visual ‘tearing’ when the camera is moved quickly and almost no stutter in busy sequences. The controls feel much more responsive as a result – you don’t get that feeling of ‘lag’ as the screen catches up to your movements.

And this made the game infinitely more ‘playable’. I didn’t care that the grass doesn’t look as realistic as in the latest Crytek games, or that some of the textures look blocky. I just liked the game responding accurately to my commands, and not having the distinctive blur and tearing that you get with low frame rates.
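The responsiveness point can be put in rough numbers too. This is a minimal sketch, assuming a perfectly steady frame rate and ignoring every other source of delay (the display, the controller, the game's own input handling): the gap between frames is simply 1000 ms divided by the frame rate. The function name is my own.

```python
def frame_time_ms(fps):
    """Time between consecutive frames, in milliseconds, at a steady frame rate."""
    return 1000.0 / fps


for fps in (30, 60):
    print(f"{fps}fps: a new frame every {frame_time_ms(fps):.1f} ms")
```

At 60fps the screen gets a fresh frame roughly every 17 ms rather than every 33 ms, so the result of moving the camera can show up about half a frame interval sooner than it would at 30fps.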

Yet, the vast majority of games on the PS3 and 360 run at a maximum of 30fps (and if you’ve played Skyrim on the PS3, that ‘maximum’ is almost never attained). When there are large action sequences, the rate often slows and games end up stuttering. It’s quite frustrating, and I think that this should absolutely not be allowed to happen. Because developers are making games for multiple platforms, they tend to aim high and then ‘cut down’ the performance to suit inferior hardware. But the experience on inferior hardware ends up becoming inconsistent.

It would be far better if developers made sure that games have a minimum rather than a maximum frame rate. Also, I’d like to see far more games running at a constant 60fps. I think this is vastly more important to the overall experience than maintaining flashy effects on hardware that is inevitably going to struggle with them if the game is not coded efficiently.
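As a rough illustration of what targeting a minimum frame rate could look like, here is a minimal Python sketch of one known approach, dynamic resolution scaling: when a frame takes longer than its time budget, the next one is rendered at a slightly lower resolution, and detail is restored when there is headroom. Everything here (the function name `adjust_render_scale`, the step size, the 85% headroom threshold, the 0.5 floor, the sample frame times) is a hypothetical choice of mine for illustration, not how any particular game or engine does it.

```python
TARGET_FPS = 60
FRAME_BUDGET_MS = 1000.0 / TARGET_FPS  # about 16.7 ms per frame at 60fps


def adjust_render_scale(scale, last_frame_ms, budget_ms=FRAME_BUDGET_MS,
                        step=0.05, min_scale=0.5, max_scale=1.0):
    """Return a new render scale based on how long the last frame took.

    All thresholds are illustrative assumptions, not values from a real engine.
    """
    if last_frame_ms > budget_ms:
        # Over budget: lower the resolution so the next frame is cheaper.
        scale -= step
    elif last_frame_ms < 0.85 * budget_ms:
        # Comfortable headroom: restore detail gradually.
        scale += step
    # Keep the scale within sensible bounds.
    return max(min_scale, min(max_scale, scale))


# Made-up frame times (ms) for a busy action sequence followed by a quiet one.
scale = 1.0
for frame_ms in (15.0, 19.0, 21.0, 18.0, 16.0, 12.0, 11.0):
    scale = adjust_render_scale(scale, frame_ms)
    print(f"frame took {frame_ms:.1f} ms -> render scale {scale:.2f}")
```

The point of the design is that the frame rate is treated as fixed and the visual quality is what gives way, rather than the other way round.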

PC users have had the luxury of being able to tweak their games to achieve a balance between performance and visual quality. Unfortunately, console gamers are stuck with the lowest common denominator in many cases. My key message here is that smoothness and responsiveness trump ‘being pretty’. Nice graphics look great in still images, but games are dynamic. The philosophy around game design perhaps needs to take this into account a little more in future than it seems to currently.